Contributors:

Mariana Shimabukuro, Deval Panchal, Shawn Yama, and Christopher Collins

What is LangEye?

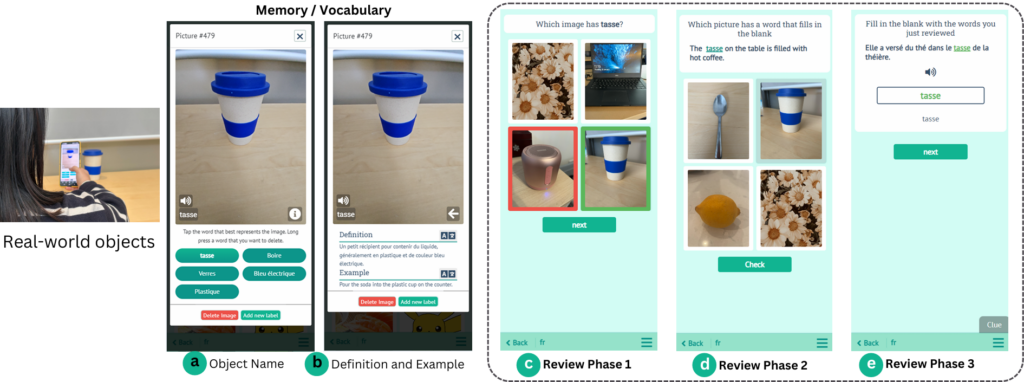

LangEye is a mobile application that empowers language learners to build vocabulary through real-world interactions. Learners use their smartphone cameras to capture objects in their surroundings, and LangEye turns these captures into personalized vocabulary “memories” enriched with definitions, example sentences, and pronunciation, generated using computer vision, large language models, and machine translation.

The app features a structured review system that progresses from picture recognition to free recall, promoting contextual and self-directed learning. An exploratory study with French learners demonstrated that learner-curated content can enhance motivation and engagement, while also revealing challenges in AI-generated feedback and adaptive design.

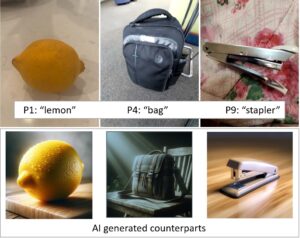

Notably, the study included a comparison between participants who used their own photographs and those who interacted with AI-generated images representing the same vocabulary items (see Image X). While both groups found the app usable, participants who captured their own photos more often described the experience as engaging and personally meaningful. These preliminary findings suggest that allowing learners to curate their own visual content may enhance contextual relevance and motivation, though further studies are needed to assess the long-term impact on vocabulary acquisition.

How was LangEye created?

LangEye was developed as part of Dr. Mariana Shimabukuro’s PhD research at Ontario Tech University, under the supervision of Dr. Christopher Collins. The project benefited greatly from the contributions of two alumni: Deval Panchal, who implemented the app as a research assistant and lead developer, and Shawn Yama, who contributed to the early UI/UX design of the application.

LangEye will be presented at the ACL 2025 BEA Workshop on Innovative Use of NLP for Educational Applications in Vienna, Austria.

Try LangEye Yourself: DEMO

You can try out the demo at https://llar.apps.science.ontariotechu.ca/ by logging in with the email test@email.com and the password b09e80.

Publications

- Shimabukuro, M., Panchal, D., & Collins, C. (2025). LangEye: Toward ‘Anytime’ Learner-Driven Vocabulary Learning From Real-World Objects. In Proceedings of the ACL 2025 BEA Workshop on Innovative Use of NLP for Educational Applications.

@inproceedings{Shimabukuro2025LangEye,
  author = {Shimabukuro, Mariana and Panchal, Deval and Collins, Christopher},
  title = {LangEye: Toward ‘Anytime’ Learner-Driven Vocabulary Learning From Real-World Objects},
  booktitle = {Proceedings of the ACL 2025 BEA Workshop on Innovative Use of NLP for Educational Applications},
  year = {2025}
}