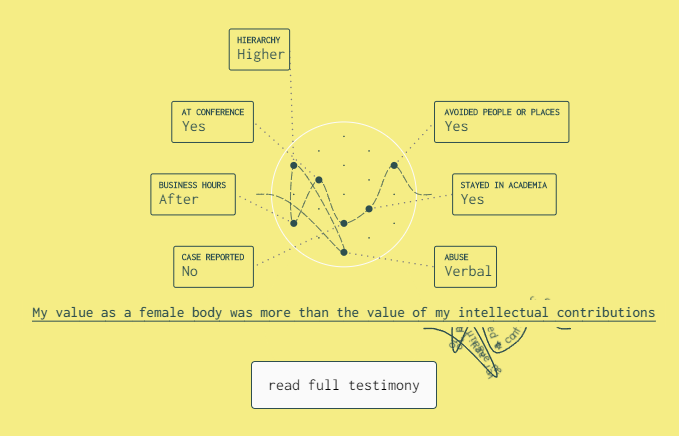

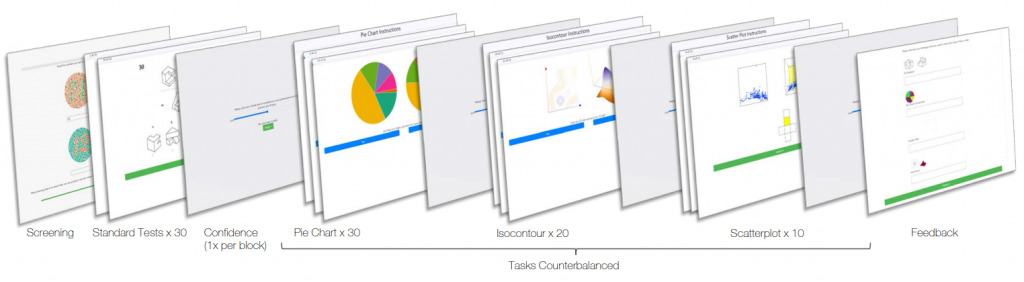

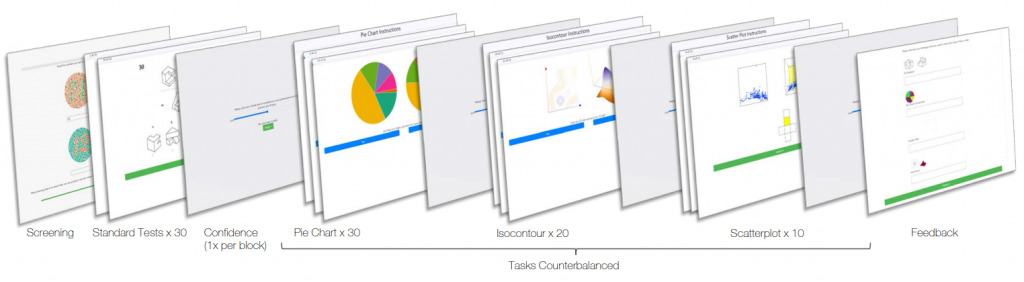

Problem-driven visualization work is rooted in deeply understanding the data, actors, processes, and workflows of a target domain. However, an individual’s personality traits and cognitive abilities may also influence visualization use. Diverse user needs and abilities raise natural questions about specificity in visualization design: Could individuals from different domains exhibit performance differences when using visualizations? Are any systematic variations related to their cognitive abilities? This study bridges domain-specific perspectives on visualization design with those provided by cognition and perception. We measure variations in visualization task performance across chemistry, computer science, and education, and relate these differences to variations in spatial ability. We conducted an online study with over 60 domain experts, consisting of tasks involving pie charts, isocontour plots, and 3D scatterplots and grounded by a well-documented spatial ability test. Task performance (correctness) varied with profession for the more complex visualizations (isocontour plots and scatterplots), but not for pie charts, a comparatively common visualization. We found that correctness correlates with spatial ability and that the professions differ in spatial ability. These results indicate that domains differ not only in the specifics of their data and tasks, but also in their members’ cognitive traits and in how effectively those members engage with visualizations. Analyzing participants’ confidence and strategy comments suggests that focusing on performance alone neglects important nuances, such as differing approaches to engaging with even common visualizations and potential skill transference. Our findings offer a fresh perspective on discipline-specific visualization, with specific recommendations to help guide visualization design that celebrates the uniqueness of the disciplines and individuals we seek to serve.

Our featured blog post on this research paper can be found here.

